Blog #3

Algorithmic Efficiency

Big O, Omega, and Theta notations

Describing the Asymptotic Behavior of Algorithms

March 7th, 2023

As computer programs become increasingly complex and data sets continue to grow in size, it becomes more important to understand the performance characteristics of algorithms. This is where Big O notation, Omega notation, and Theta notation come in.

Big O, Omega, and Theta

Big O notation, Omega notation, and Theta notation are used to describe the time complexity of algorithms. Time complexity, or "running time", describes how the amount of time an algorithm takes to "run" or complete a task grows as the size of the input data increases. By understanding time complexity, developers can make informed decisions about which algorithm to use for a particular task.

"Wave of the Hand" Approximations

You'll notice that none of these notations include lower-order terms or constant factors. This is a sort of formalized imprecision. When reasoning about algorithmic efficiency, developers usually don't care about the exact details. These notations are not meant to describe the exact amount of time or number of steps an algorithm will take, but rather how it behaves as the input size grows toward infinity: its asymptotic behavior. What matters is the shape of its growth curve as n gets really large, whether that is constant, linear, quadratic, cubic, and so on.
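To see why lower-order terms can be dropped, here is a minimal Python sketch. The step-count function T(n) = 3n² + 5n + 2 is made up for illustration; it stands in for an exact count of some algorithm's steps. Dividing by n² shows the 5n + 2 part fading away as n grows, leaving only a constant factor.

```python
# Hypothetical step-count function, assumed for illustration: T(n) = 3n^2 + 5n + 2.
def t(n: int) -> int:
    """Exact (made-up) number of steps an algorithm takes on an input of size n."""
    return 3 * n**2 + 5 * n + 2


def ratio_to_n_squared(n: int) -> float:
    """Compare T(n) with its dominant term n^2 alone."""
    return t(n) / n**2


for n in (10, 100, 1_000, 10_000):
    # As n grows, the 5n + 2 part becomes negligible and the ratio settles near 3,
    # a constant factor, so T(n) grows like n^2.
    print(f"n={n:>6}  T(n)/n^2 = {ratio_to_n_squared(n):.6f}")
```

The ratio drops from 3.52 at n = 10 to about 3.0005 at n = 10,000, which is why we would simply call this algorithm quadratic.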

In this context, a "wave of the hand" approximation provides enough information to make informed decisions. All a developer needs is a rough sense of how fast or slow an algorithm will be, and that's what these notations are for. After all, computer performance varies widely from machine to machine and improves over time, so it wouldn't make sense to compare precise numbers.

Big O Notation

Big O notation describes an upper bound on an algorithm's time complexity: the running time grows no faster than the given function. In practice, it is most often used to estimate the worst-case scenario for how long an algorithm will take to complete a task. If every single step of the algorithm needs to be taken, what would that look like? This is why Big O is often the primary consideration when choosing an algorithm, as the worst case is typically a more telling concern than the best case.
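As a small sketch of "every single step being taken", the Python below counts the comparisons a simple left-to-right linear search makes. The function name and the step counter are illustrative assumptions, not part of any library. When the target is the last element, the search has to check every element, so the count equals n.

```python
from typing import Optional, Sequence, Tuple


def linear_search(items: Sequence[int], target: int) -> Tuple[Optional[int], int]:
    """Scan left to right; return (index of target or None, comparisons made)."""
    comparisons = 0
    for i, value in enumerate(items):
        comparisons += 1
        if value == target:
            return i, comparisons
    return None, comparisons


for n in (10, 100, 1_000):
    data = list(range(n))
    # Worst case: the target is the last element, so every element is checked.
    _, steps = linear_search(data, n - 1)
    print(f"n={n:>5}  comparisons={steps}")
```

Doubling the input doubles the worst-case comparison count, which is the linear growth that O(n) describes.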

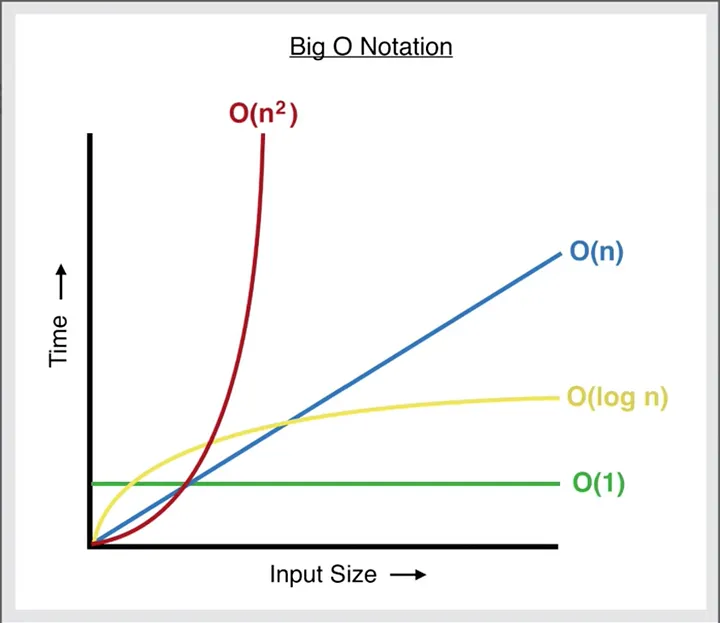

Big O notation is expressed using the capital letter "O" followed by a function of the input size n. The function describes the growth rate of the algorithm as the input size increases. For example, an algorithm with a time complexity of O(n) takes time that grows at most linearly with the size of the input data. This would be described as being "on the order of n steps".

Big O Time Complexity Graph. Credit: Huang, D.
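To get a feel for the shapes in the graph, this sketch prints rough values of a few common growth functions at increasing input sizes. It assumes base-2 logarithms; the base only changes a constant factor, which these notations ignore anyway.

```python
import math

# Common growth-rate functions used with Big O, Omega and Theta.
GROWTH = {
    "constant (1)": lambda n: 1,
    "logarithmic (log n)": lambda n: math.log2(n),
    "linear (n)": lambda n: n,
    "linearithmic (n log n)": lambda n: n * math.log2(n),
    "quadratic (n^2)": lambda n: n**2,
}

sizes = (10, 100, 1_000, 10_000)
for name, f in GROWTH.items():
    # Each row shows how one function grows as n increases tenfold per column.
    row = "  ".join(f"{f(n):>12,.0f}" for n in sizes)
    print(f"{name:<24}{row}")
```

Reading down a column shows how quickly the curves separate: at n = 10,000 the quadratic row is already in the hundreds of millions while the logarithmic row has barely moved.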

Big Omega Notation

Omega notation describes a lower bound on the time complexity of an algorithm, and it is often used to estimate the best-case scenario for how long an algorithm will take to complete a task. Say you have an algorithm for searching an array for a specific value (assuming the value exists). If you run the algorithm on a given array and it gets lucky, finding the value at the very first index it checks, what would that look like? Clearly, that is the ideal case for the algorithm in this scenario. It would be described as "Omega of 1", also known as "constant time", because no matter how large the input array is, the best case is always finding the value on the very first step.
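Here is the array-search example as a minimal Python sketch, assuming, as the text does, that the value is present. The helper `comparisons_to_find` is hypothetical. Placing the target at index 0 gives the best case, and the comparison count stays at 1 no matter how large the array gets.

```python
from typing import Sequence


def comparisons_to_find(items: Sequence[int], target: int) -> int:
    """Count comparisons a left-to-right linear search makes before finding target.

    Assumes target is present, as in the search example from the text.
    """
    for steps, value in enumerate(items, start=1):
        if value == target:
            return steps
    raise ValueError("target not in items")


for n in (10, 1_000, 100_000):
    data = list(range(n))
    # Best case: the target sits at index 0, so the search stops after one comparison.
    best = comparisons_to_find(data, data[0])
    print(f"n={n:>7}  best-case comparisons={best}")
```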

Omega notation is expressed using the capital Greek letter "Ω" followed by a function, typically drawn from the same set considered with Big O: constant, logarithmic, linear, quadratic, and so on. The function describes the lower-bound behavior of the algorithm as the input size n increases. As the data set grows, what effect will that have on the best case? An algorithm with a time complexity of Ω(n) takes time that grows at least linearly with the size of the input data. It is important to consider both Big O and Big Omega to see how an algorithm will perform in its worst-case and best-case scenarios.
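For a contrasting example of Ω(n), consider finding the largest value in an unsorted list. In the sketch below, which uses a hypothetical `find_max` helper, even the luckiest input (with the maximum in the first position) still has to be scanned to the end, because any element not yet seen could be larger. Its best case therefore grows linearly.

```python
from typing import Sequence, Tuple


def find_max(items: Sequence[int]) -> Tuple[int, int]:
    """Return (largest value, number of elements inspected)."""
    if not items:
        raise ValueError("find_max() needs at least one element")
    best = items[0]
    inspected = 1
    for value in items[1:]:
        inspected += 1
        if value > best:
            best = value
    return best, inspected


# Even a "lucky" input (the maximum comes first) must be scanned to the end,
# because any unseen element could be larger, so the best case is still n steps.
for n in (10, 100, 1_000):
    lucky = list(range(n, 0, -1))  # maximum at index 0
    print(n, find_max(lucky))
```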

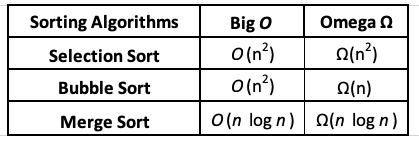

An algorithm's Big O and Big Omega bounds may differ, or they may be the same. When the upper-bound and lower-bound approximations are identical, we can use "Theta notation".

Time Complexities of 3 sorting algorithms. Which do you think performs most efficiently?
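The text implies that Bubble Sort is the algorithm in the table whose bounds differ. One common reason is an early-exit check, which this sketch assumes (the exact variant in the table isn't stated): if a full pass makes no swaps, the list is already sorted and the algorithm stops. Counting comparisons shows the gap between its best case (already sorted input) and its worst case (reversed input).

```python
from typing import List, Tuple


def bubble_sort(items: List[int]) -> Tuple[List[int], int]:
    """Bubble sort with an early exit; returns (sorted copy, comparisons made)."""
    a = list(items)
    comparisons = 0
    for end in range(len(a) - 1, 0, -1):
        swapped = False
        for i in range(end):
            comparisons += 1
            if a[i] > a[i + 1]:
                a[i], a[i + 1] = a[i + 1], a[i]
                swapped = True
        if not swapped:  # no swaps in a full pass means the list is already sorted
            break
    return a, comparisons


n = 1_000
_, best = bubble_sort(list(range(n)))          # already sorted: a single pass
_, worst = bubble_sort(list(range(n, 0, -1)))  # reversed: every pass is needed
print(f"best case:  {best} comparisons (about n)")
print(f"worst case: {worst} comparisons (about n^2 / 2)")
```

For n = 1,000 this gives 999 comparisons in the best case and 499,500 in the worst, which is why Bubble Sort with this check is usually listed as Ω(n) but O(n²).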

Big Theta Notation

Theta notation, expressed using the capital Greek letter "Θ" followed by a function, applies when the Big O and Big Omega approximations are the same, and it describes both the upper and lower bounds of an algorithm's time complexity. It is the tightest bound we can give, because it describes how long the algorithm will take to run in both the best-case and worst-case scenarios for a given input size. In the table above, this is the case for both the Selection Sort and Merge Sort algorithms. Hence, we could describe each of them with Theta: Selection Sort is on the order of "Theta of n squared" steps (quadratic), and Merge Sort is on the order of "Theta of n log n" steps (linearithmic, also called log-linear). Comparing these two, which is better? For large data sets, Merge Sort far outperforms Selection Sort (and Bubble Sort, too), because its running time grows much more slowly as the data set gets larger.
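To make the comparison concrete, this sketch counts the comparisons made by straightforward Python versions of Selection Sort and Merge Sort. Both implementations are illustrative rather than tuned, and counting comparisons is an assumed stand-in for running time.

```python
from typing import List, Tuple


def selection_sort(items: List[int]) -> Tuple[List[int], int]:
    """Return (sorted copy, comparisons). Always scans the whole unsorted part."""
    a = list(items)
    comparisons = 0
    for i in range(len(a) - 1):
        smallest = i
        for j in range(i + 1, len(a)):
            comparisons += 1
            if a[j] < a[smallest]:
                smallest = j
        a[i], a[smallest] = a[smallest], a[i]
    return a, comparisons


def merge_sort(items: List[int]) -> Tuple[List[int], int]:
    """Return (sorted copy, comparisons) using top-down merge sort."""
    if len(items) <= 1:
        return list(items), 0
    mid = len(items) // 2
    left, c_left = merge_sort(items[:mid])
    right, c_right = merge_sort(items[mid:])
    merged: List[int] = []
    comparisons = c_left + c_right
    i = j = 0
    # Merge the two sorted halves, counting each element comparison.
    while i < len(left) and j < len(right):
        comparisons += 1
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged, comparisons


for n in (100, 1_000, 2_000):
    data = list(range(n, 0, -1))  # reversed input
    _, sel = selection_sort(data)
    _, mer = merge_sort(data)
    print(f"n={n:>5}  selection={sel:>9,}  merge={mer:>7,}")
```

Selection Sort makes exactly n(n − 1)/2 comparisons regardless of input order, consistent with Θ(n²), while Merge Sort's count grows roughly in proportion to n log n.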

Final Thoughts

In summary, Big O notation, Omega notation, and Theta notation are used in computer science to describe the time complexity of algorithms. Big O notation describes an upper bound on an algorithm's time complexity and is typically used to estimate the worst-case scenario. Big Omega notation describes a lower bound and is typically used to estimate the best-case scenario. Finally, Big Theta notation describes both the upper and lower bounds of time complexity in situations where the upper- and lower-bound behaviors are the same. By understanding these notations, developers can choose the most efficient algorithm for a given task and optimize their programs to run as efficiently as possible.

Thanks for Reading! :)